mirror of

https://github.com/alibaba/DataX.git

synced 2025-05-02 11:11:08 +08:00

Merge pull request #2283 from xxsc0529/master

[FEATURE]:oceanbase plugin add direct path support

This commit is contained in:

commit

d82a5aeae6

231

oceanbasev10reader/doc/oceanbasev10reader.md

Normal file

@ -0,0 +1,231 @@

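## 1 Quick Introduction

The OceanbaseV10Reader plugin reads data from Oceanbase V1.0. Under the hood, it connects to the remote Oceanbase 1.0 database through a Java client (JDBC) and executes the corresponding SQL statements to SELECT the data out of the database.

Note: oceanbasev10reader applies only to ob 1.0 and later versions.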

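## 2 Implementation Principle

In short, Oceanbasev10Reader connects to the remote Oceanbase database through a Java client connector, generates a SELECT SQL statement from the user's configuration, and sends it to the remote Oceanbase database (v1.0 or later). It then assembles the result of that SQL into an abstract dataset using DataX's own data types and passes it to the downstream Writer.<br />When the user configures Table, Column, and Where, OceanbaseV10Reader concatenates them into a SQL statement and sends it to the Oceanbase database; when the user configures querySql, Oceanbasev10Reader sends it to the Oceanbase database directly.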
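The two paths described above (assembling a statement from table, column, and where, versus passing querySql through unchanged) can be sketched roughly as follows. This is a minimal illustration, not the plugin's actual code: the class `SelectSqlSketch` and the method `buildQuery` are hypothetical names, and the exact SQL text the real reader produces may differ.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch: how a reader might choose between querySql and an assembled SELECT.
class SelectSqlSketch {

    // If querySql is set it wins and is returned unchanged;
    // otherwise a SELECT is assembled from columns, table, and the optional where.
    static String buildQuery(String querySql, List<String> columns, String table, String where) {
        if (querySql != null && !querySql.trim().isEmpty()) {
            return querySql;
        }
        String sql = "select " + String.join(",", columns) + " from " + table;
        if (where != null && !where.trim().isEmpty()) {
            sql += " where (" + where + ")";
        }
        return sql;
    }

    public static void main(String[] args) {
        // An empty where means a full-table read.
        System.out.println(buildQuery(null, Arrays.asList("id", "name"), "table", ""));
        // querySql takes priority over table/column/where.
        System.out.println(buildQuery("select db_id from db_info", Arrays.asList("id"), "table", "id > 1"));
    }
}
```

Giving querySql priority in a single place keeps the precedence rule explicit instead of spreading it across the configuration handling.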

## 3 Features

### 3.1 Sample Configuration

- A job that extracts data from an Oceanbase database and synchronizes it to the local machine:

```
{
  "job": {
    "setting": {
      "speed": {
        // Transfer speed limit in byte/s; DataX tries to reach this speed without exceeding it.
        "byte": 1048576
      },
      // Error limits
      "errorLimit": {
        // Maximum number of failed records; the job reports an error once this is exceeded.
        "record": 0,
        // Maximum ratio of failed records: 1.0 means 100%, 0.02 means 2%.
        "percentage": 0.02
      }
    },
    "content": [
      {
        "reader": {
          "name": "oceanbasev10reader",
          "parameter": {
            "where": "",
            "timeout": 5,
            "readBatchSize": 50000,
            "column": [
              "id","name"
            ],
            "connection": [
              {
                "jdbcUrl": ["||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/dbName"],
                "table": [
                  "table"
                ]
              }
            ]
          }
        },
        "writer": {
          // Writer type
          "name": "streamwriter",
          // Whether to print the content
          "parameter": {
            "print": true
          }
        }
      }
    ]
  }
}
```

```
{
  "job": {
    "setting": {
      "speed": {
        "channel": 3
      },
      "errorLimit": {
        "record": 0
      }
    },
    "content": [
      {
        "reader": {
          "name": "oceanbasev10reader",
          "parameter": {
            "where": "",
            "timeout": 5,
            "fetchSize": 500,
            "column": [
              "id",
              "name"
            ],
            "splitPk": "pk",
            "connection": [
              {
                "jdbcUrl": ["||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/dbName"],
                "table": [
                  "table"
                ]
              }
            ],
            "username":"xxx",
            "password":"xxx"
          }
        },
        "writer": {
          "name": "streamwriter",
          "parameter": {
            "print": true
          }
        }
      }
    ]
  }
}
```

- A job that synchronizes the result of a custom SQL query to the local machine:

```
{
  "job": {
    "setting": {
      "speed": {
        "channel": 3
      }
    },
    "content": [
      {
        "reader": {
          "name": "oceanbasev10reader",
          "parameter": {
            "timeout": 5,
            "fetchSize": 500,
            "splitPk": "pk",
            "connection": [
              {
                "jdbcUrl": ["||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/dbName"],
                "querySql": [
                  "select db_id,on_line_flag from db_info where db_id < 10;"
                ]
              }
            ],
            "username":"xxx",
            "password":"xxx"
          }
        },
        "writer": {
          "name": "streamwriter",
          "parameter": {
            "print": false,
            "encoding": "UTF-8"
          }
        }
      }
    ]
  }
}
```

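### 3.2 Parameter Description

- **jdbcUrl**
  - Description: the JDBC URL used to connect to ob. Two formats are supported:
    - ||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/db
      - With this format, username is just the user name itself; the three-part form is not needed.
    - jdbc:mysql://ip:port/db
      - With this format, username must use the three-part form.
  - Required: yes
  - Default: none
- **table**
  - Description: the tables to synchronize, given as a JSON array, so several tables can be extracted at once. When multiple tables are configured, the user must ensure that they share the same schema; OceanbaseReader does not check whether they form one logical table. Note that table must be placed inside the connection configuration unit.
  - Required: yes
  - Default: none
- **column**
  - Description: the set of column names to synchronize from the configured table, given as a JSON array.
    - Column pruning is supported: you can export only a subset of the columns.
    - Column reordering is supported: columns need not follow the order of the table schema. The wildcard * is also supported; check the column information carefully before using it.
  - Required: yes
  - Default: none
- **where**
  - Description: the filter condition. OceanbaseReader concatenates the configured column, table, and where into a SQL statement and extracts data with it. In practice, jobs often synchronize only the current day's data, in which case where can be set to gmt_create > $bizdate. Here gmt_create must not be an indexed column, nor the first column of a composite index.<br />The where condition is an effective way to do incremental synchronization. If where is not specified (either the key or the value is missing), DataX synchronizes the full data set.
  - Required: no
  - Default: none
- **splitPk**
  - Description: if splitPk is specified, OBReader shards the data by the column that splitPk names, and DataX starts concurrent tasks to synchronize the shards, which can greatly improve synchronization throughput.
    - We recommend using the table's primary key as splitPk, because primary keys are usually evenly distributed, so the resulting shards are less prone to data hot spots.
    - Currently splitPk supports only int columns; `other types are not supported`. Specifying an unsupported type causes an error.<br />If splitPk is omitted, the table is not split and OBReader synchronizes the full data set over a single channel.
  - Required: no
  - Default: empty
- **querySql**
  - Description: in some business scenarios the where option is not expressive enough for the required filter, and this option lets the user supply a custom query instead. Once it is configured, DataX ignores options such as table and column and uses the content of this option directly to select the data.
    - `When querySql is configured, OceanbaseReader ignores the table, column, and where settings`; querySql takes priority over table, column, and where.
  - Required: no
  - Default: none
- **timeout**
  - Description: SQL execution timeout, in minutes.
  - Required: no
  - Default: 5
- **username**
  - Description: the user name used to access oceanbase.
  - Required: yes
  - Default: none
- **password**
  - Description: the password used to access oceanbase.
  - Required: yes
  - Default: none
- **readByPartition**
  - Description: whether to split tasks by partition for partitioned tables.
  - Required: no
  - Default: false
- **readBatchSize**
  - Description: the number of rows read at a time. Lower this value if you run out of memory.
  - Required: no
  - Default: 10000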
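To make the splitPk description more concrete, here is a rough sketch of how an integer key range could be cut into contiguous shards, each of which becomes the filter of one concurrent task. It is an illustration under stated assumptions, not the plugin's actual splitting algorithm: `SplitPkSketch` and `splitRange` are hypothetical names, the minimum and maximum key values are assumed to be known already, and rows whose key is NULL are not covered.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: cut the integer range [min, max] of splitPk into at most `parts` shards.
class SplitPkSketch {

    static List<String> splitRange(String splitPk, long min, long max, int parts) {
        List<String> conditions = new ArrayList<>();
        long total = max - min + 1;
        // Ceiling division so that `parts` shards always cover the whole range.
        long step = Math.max(1, (total + parts - 1) / parts);
        for (long lo = min; lo <= max; lo += step) {
            long hi = Math.min(max, lo + step - 1);
            conditions.add(splitPk + " >= " + lo + " and " + splitPk + " <= " + hi);
        }
        return conditions;
    }

    public static void main(String[] args) {
        // Keys 1..10 split for 3 channels.
        for (String condition : splitRange("pk", 1, 10, 3)) {
            System.out.println(condition);
        }
    }
}
```

An evenly distributed key such as a primary key gives shards of similar size, which is why the description above recommends it.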

### 3.3 Type Conversion

The type mapping that OceanbaseReader applies to Oceanbase types is listed below:

| DataX internal type | Oceanbase data type |
| --- | --- |
| Long | int |
| Double | numeric |
| String | varchar |
| Date | timestamp |
| Boolean | bool |

## 4 Performance Test

### 4.1 Test Report

Throughput depends mainly on the number of channels, and the channel count is limited by the number of sharded tables or by the number of data shards of a single table.<br />To check the shard count when exporting a single table, run the following in idb: `select/*+query_timeout(150000000)*/ s.tablet_count from __all_table t,__table_stat s where t.table_id = s.table_id and t.table_name = 'tableName'`

| Channels | DataX speed (Rec/s) | DataX throughput (MB/s) |
| --- | --- | --- |
| 1 | 15001 | 4.7 |
| 2 | 28169 | 11.66 |
| 3 | 37076 | 14.77 |
| 4 | 55862 | 17.60 |
| 5 | 70860 | 22.31 |

363

oceanbasev10writer/doc/oceanbasev10writer.md

Normal file

@ -0,0 +1,363 @@

## 1 Quick Introduction

The OceanBaseV10Writer plugin writes data into target tables of OceanBase V1.0 and later. Under the hood, OceanbaseV10Writer connects to the remote OceanBase database via obproxy through a Java client (MySQL JDBC or the oceanbase client underneath) and executes insert .. on duplicate key update statements to write the data into OceanBase, committing in batches internally.

Oceanbasev10Writer is aimed at ETL engineers, who use it to import data from a data warehouse into Oceanbase. It can also serve DBAs and similar users as a data migration tool.

Note: oceanbasewriter is the writer for ob 0.5, while oceanbasev10writer is the writer for ob 1.0 and later.

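## 2 Implementation Principle

Oceanbasev10Writer obtains the protocol data produced by the Reader through the DataX framework and generates insert ... on duplicate key update statements; on a primary-key or unique-key conflict, all columns of the row are updated. This is currently the only behavior: an insert-only mode (write without updating) and updating only specified columns are not yet supported. For performance, writes are batched, and a write request is issued only once the number of accumulated rows reaches a preset threshold.

The plugin connects to ob via obproxy using the Mysql/Oceanbase JDBC driver.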
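As a rough illustration of the statement shape described above, the sketch below builds an insert ... on duplicate key update template for a table and a column list. `UpsertSqlSketch` and `buildUpsert` are hypothetical names, and the exact text generated by the real writer may differ; the sketch only shows that every configured column is both inserted and, on a key conflict, overwritten.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical sketch: build a MySQL-style upsert template for batched writes.
class UpsertSqlSketch {

    static String buildUpsert(String table, List<String> columns) {
        String cols = String.join(",", columns);
        // One "?" placeholder per column, filled in per row when the batch is executed.
        String marks = String.join(",", Collections.nCopies(columns.size(), "?"));
        // On a key conflict, every column takes the value from the incoming row.
        String updates = columns.stream()
                .map(c -> c + "=values(" + c + ")")
                .collect(Collectors.joining(","));
        return "insert into " + table + " (" + cols + ") values (" + marks + ")"
                + " on duplicate key update " + updates;
    }

    public static void main(String[] args) {
        System.out.println(buildUpsert("test", Arrays.asList("id", "name")));
    }
}
```

With a template like this, a batch consists of many parameter rows bound to one prepared statement, which is what keeps the number of round trips low.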

## 3 Features

### 3.1 Sample Configuration

- This sample imports data generated in memory into Oceanbase.

```
{
  "job": {
    "setting": {
      "speed": {
        "channel": 1
      },
      "errorLimit": {
        "record": 1
      }
    },
    "content": [
      {
        "reader": {
          "name": "streamreader",
          "parameter": {
            "column" : [
              { "value": "DataX", "type": "string" },
              { "value": 19880808, "type": "long" },
              { "value": "1988-08-08 08:08:08", "type": "date" },
              { "value": true, "type": "bool" },
              { "value": "test", "type": "bytes" }
            ],
            "sliceRecordCount": 1000
          }
        },
        "writer": {
          "name": "oceanbasev10writer",
          "parameter": {
            "obWriteMode": "update",
            "column": [
              "id",
              "name"
            ],
            "preSql": [
              "delete from test"
            ],
            "connection": [
              {
                "jdbcUrl": "||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/dbName",
                "table": [
                  "test"
                ]
              }
            ],
            "username": "xxx",
            "password":"xxx",
            "batchSize": 256,
            "memstoreThreshold": "0.9"
          }
        }
      }
    ]
  }
}
```

- This sample imports data generated in memory into Oceanbase using direct path import.

```
{
  "job": {
    "setting": {
      "speed": {
        "channel": 1
      },
      "errorLimit": {
        "record": 1
      }
    },
    "content": [
      {
        "reader": {
          "name": "streamreader",
          "parameter": {
            "column" : [
              { "value": "DataX", "type": "string" },
              { "value": 19880808, "type": "long" },
              { "value": "1988-08-08 08:08:08", "type": "date" },
              { "value": true, "type": "bool" },
              { "value": "test", "type": "bytes" }
            ],
            "sliceRecordCount": 1000
          }
        },
        "writer": {
          "name": "oceanbasev10writer",
          "parameter": {
            "obWriteMode": "update",
            "column": [
              "id",
              "name"
            ],
            "preSql": [
              "delete from test"
            ],
            "connection": [
              {
                "jdbcUrl": "||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/dbName",
                "table": [
                  "test"
                ]
              }
            ],
            "username": "xxx",
            "password":"xxx",
            "batchSize": 256,
            "directPath": true,
            "rpcPort": 2882,
            "parallel": 8,
            "heartBeatInterval": 1000,
            "heartBeatTimeout": 6000,
            "bufferSize": 1048576,
            "memstoreThreshold": "0.9"
          }
        }
      }
    ]
  }
}
```

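### 3.2 Parameter Description

- **jdbcUrl**
  - Description: the JDBC URL used to connect to ob. Two formats are supported:
    - ||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_||jdbc:mysql://obproxyIp:obproxyPort/db
      - With this format, username is just the user name itself; the three-part form is not needed.
    - jdbc:mysql://ip:port/db
      - With this format, username must use the three-part form.
  - Required: yes
  - Default: none
- **table**
  - Description: the name of the target table. The open-source obwriter plugin supports writing to only one table. The table name normally does not include the database name.
  - Required: yes
  - Default: none
- **column**
  - Description: the columns of the target table to write, separated by commas, for example: "column": ["id","name","age"].

```
**The column option must be specified and cannot be left empty!**
Notes: 1. We strongly advise against configuring it this way, because when the number or types of the target table's columns change, your job may run incorrectly or fail.
2. column must not contain any constant values.
```

  - Required: yes
  - Default: none
- **preSql**
  - Description: standard SQL statements executed before data is written to the target table. If the SQL needs to refer to the table being written, use `@table`; it is replaced with the actual table name when the statement is executed. For example, if the job writes to 100 homogeneous sharded tables (named datax_00, datax_01, ... datax_98, datax_99) and you want to delete each table's data before importing, configure `"preSql":["delete from @table"]`. Before writing to each table, the corresponding delete from statement for that table is executed first. Only delete statements are supported.
  - Required: no
  - Default: none
- **batchSize**
  - Description: the number of records submitted per batch. A larger value greatly reduces the number of network round trips between DataX and Oceanbase and improves overall throughput, but setting it too high may cause the DataX process to run out of memory (OOM).
  - Required: no
  - Default: 1000
- **memstoreThreshold**
  - Description: the memstore usage ratio of the OB tenant. When usage reaches this threshold, the import pauses and resumes once memory has been released, which prevents the tenant from running out of memory.
  - Required: no
  - Default: 0.9
- **username**
  - Description: the user name used to access oceanbase. Note that when jdbcUrl uses the ||_dsc_ob10_dsc_||clusterName:tenantName||_dsc_ob10_dsc_|| format, the ob cluster name and tenant name are not configured here; otherwise the three-part form is required.
  - Required: yes
  - Default: none
- **password**
  - Description: the password used to access oceanbase.
  - Required: yes
  - Default: none
- **writerThreadCount**
  - Description: the number of writer threads used in each channel.
  - Required: no
  - Default: 1
- **directPath**
  - Description: enables direct path import.
  - Required: no
  - Default: false
- **rpcPort**
  - Description: the RPC port of oceanbase.
  - Required: no
  - Default: none
- **parallel**
  - Description: the number of threads used for direct path import.
  - Required: no
  - Default: 1
- **bufferSize**
  - Description: the size of the data chunks that direct path import splits the data into.
  - Required: no
  - Default: 1048576
- **heartBeatInterval**
  - Description: the heartbeat interval for direct path import.
  - Required: no
  - Default: 1000
- **heartBeatTimeout**
  - Description: the heartbeat timeout for direct path import.
  - Required: no
  - Default: 6000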
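The first jdbcUrl format listed above embeds the cluster and tenant names in front of a plain JDBC URL. The sketch below shows one way such a string could be taken apart. `ObJdbcUrlSketch` and `parse` are hypothetical names, and how the real plugin combines the extracted names with username is not specified here, so the sketch only returns the three pieces.

```java
import java.util.regex.Pattern;

// Hypothetical sketch: split "||_dsc_ob10_dsc_||cluster:tenant||_dsc_ob10_dsc_||jdbc:mysql://..." into parts.
class ObJdbcUrlSketch {

    static final String SEP = "||_dsc_ob10_dsc_||";

    // Returns {cluster, tenant, plainJdbcUrl}; cluster and tenant are null for the plain format.
    static String[] parse(String jdbcUrl) {
        if (!jdbcUrl.startsWith(SEP)) {
            return new String[] {null, null, jdbcUrl};
        }
        // Pattern.quote is needed because "|" is a regex metacharacter.
        String[] parts = jdbcUrl.split(Pattern.quote(SEP));
        // parts[0] is the empty string before the leading separator.
        if (parts.length != 3) {
            throw new IllegalArgumentException("unexpected jdbcUrl format: " + jdbcUrl);
        }
        String[] clusterAndTenant = parts[1].split(":");
        if (clusterAndTenant.length != 2) {
            throw new IllegalArgumentException("expected cluster:tenant but got: " + parts[1]);
        }
        return new String[] {clusterAndTenant[0].trim(), clusterAndTenant[1].trim(), parts[2].trim()};
    }

    public static void main(String[] args) {
        String[] r = parse("||_dsc_ob10_dsc_||c1:t1||_dsc_ob10_dsc_||jdbc:mysql://127.0.0.1:2883/db");
        System.out.println(r[0] + " " + r[1] + " " + r[2]);
    }
}
```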

```
**When direct path import is enabled, i.e. directPath: true**
Notes: 1. rpcPort is required in this case.
2. When setting parallel, keep in mind that a suitable value depends on the load of oceanbase.
3. heartBeatTimeout must not be lower than 6000, and heartBeatInterval must not be lower than 1000;
when both are set, the difference heartBeatTimeout - heartBeatInterval must not be lower than 4000.
4. bufferSize is in bytes; the default is 1M, i.e. 1048576.
```
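The direct path constraints can be expressed as a small validation routine. This is only a sketch under assumptions: `DirectPathConfigSketch` and `validate` are hypothetical names, and it reads the third note as bounding heartBeatTimeout, heartBeatInterval, and their difference (the original wording names heartBeatTimeout in all three places, which looks like a slip).

```java
// Hypothetical sketch: check the documented direct path constraints before starting a job.
class DirectPathConfigSketch {

    static void validate(boolean directPath, Integer rpcPort,
                         long heartBeatInterval, long heartBeatTimeout) {
        if (!directPath) {
            return; // the constraints only apply when direct path import is enabled
        }
        if (rpcPort == null) {
            throw new IllegalArgumentException("rpcPort is required when directPath is true");
        }
        if (heartBeatTimeout < 6000) {
            throw new IllegalArgumentException("heartBeatTimeout must not be lower than 6000");
        }
        if (heartBeatInterval < 1000) {
            throw new IllegalArgumentException("heartBeatInterval must not be lower than 1000");
        }
        if (heartBeatTimeout - heartBeatInterval < 4000) {
            throw new IllegalArgumentException(
                    "heartBeatTimeout - heartBeatInterval must not be lower than 4000");
        }
    }

    public static void main(String[] args) {
        // The values from the sample configuration pass all checks.
        validate(true, 2882, 1000, 6000);
        System.out.println("direct path configuration accepted");
    }
}
```

Failing fast on these values at configuration time is cheaper than discovering them after the import has started.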

|

||||

## 4 FAQ

### 4.1 Write failure caused by a dropped connection

A DataX job writing to ob fails, and the log shows that the connection was dropped while writing to ob:

```

|

||||

2018-12-14 05:40:48.586 [18705170-3-17-writer] WARN CommonRdbmsWriter$Task - 遇到OB异常,回滚此次写入, 休眠 1秒,采用逐条写入提交,SQLState:S1000

|

||||

java.sql.SQLException: Could not retrieve transation read-only status server

|

||||

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:964) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:897) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:886) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:860) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:877) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:873) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.ConnectionImpl.isReadOnly(ConnectionImpl.java:3603) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.ConnectionImpl.isReadOnly(ConnectionImpl.java:3572) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.PreparedStatement.executeBatchInternal(PreparedStatement.java:1225) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.StatementImpl.executeBatch(StatementImpl.java:958) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.alibaba.datax.plugin.writer.oceanbasev10writer.task.MultiTableWriterTask.write(MultiTableWriterTask.java:357) [oceanbasev10writer-0.0.1-SNAPSHOT.jar:na]

|

||||

at com.alibaba.datax.plugin.writer.oceanbasev10writer.task.MultiTableWriterTask.calcRuleAndDoBatchInsert(MultiTableWriterTask.java:338) [oceanbasev10writer-0.0.1-SNAPSHOT.jar:na]

|

||||

at com.alibaba.datax.plugin.writer.oceanbasev10writer.task.MultiTableWriterTask.startWrite(MultiTableWriterTask.java:227) [oceanbasev10writer-0.0.1-SNAPSHOT.jar:na]

|

||||

at com.alibaba.datax.plugin.writer.oceanbasev10writer.OceanBaseV10Writer$Task.startWrite(OceanBaseV10Writer.java:360) [oceanbasev10writer-0.0.1-SNAPSHOT.jar:na]

|

||||

at com.alibaba.datax.core.taskgroup.runner.WriterRunner.run(WriterRunner.java:62) [datax-core-0.0.1-SNAPSHOT.jar:na]

|

||||

at java.lang.Thread.run(Thread.java:834) [na:1.8.0_112]

|

||||

Caused by: com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure

|

||||

The last packet successfully received from the server was 5 milliseconds ago. The last packet sent successfully to the server was 4 milliseconds ago.

|

||||

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[na:1.8.0_112]

|

||||

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[na:1.8.0_112]

|

||||

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_112]

|

||||

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_112]

|

||||

at com.mysql.jdbc.Util.handleNewInstance(Util.java:425) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:989) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3556) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3456) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3897) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:2524) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2677) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2545) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2503) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.StatementImpl.executeQuery(StatementImpl.java:1369) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.ConnectionImpl.isReadOnly(ConnectionImpl.java:3597) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

... 9 common frames omitted

|

||||

Caused by: java.io.EOFException: Can not read response from server. Expected to read 4 bytes, read 0 bytes before connection was unexpectedly lost.

|

||||

at com.mysql.jdbc.MysqlIO.readFully(MysqlIO.java:3008) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3466) ~[mysql-connector-java-5.1.40.jar:5.1.40]

|

||||

... 17 common frames omitted

|

||||

```

|

||||

关键字:could not retrieve transation status from read-only status server, communication link failure

|

||||

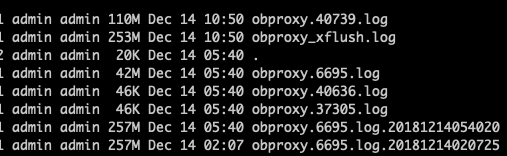

检查运行Data X任务的机器,发现obproxy在任务运行时发生若干次重启:

|

||||

|

||||

在第一次obproxy退出的日志里,找到退出原因:

|

||||

```

|

||||

[2018-12-14 05:40:47.611683] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:889) [7262][Y0-7F4480213880] [AL=47391-47390-29] obproxy's memroy is out of limit, will be going to commit suicide(mem_limited=838860800, OTHER_MEMORY_SIZE=73400320, is_out_of_mem_limit=true, cur_pos=9) BACKTRACE:0x49db91 0x47fdc9 0x43b115 0x43ee5d 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

[2018-12-14 05:40:47.612334] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:891) [7262][Y0-7F4480213880] [AL=47392-47391-651] history memory size, history_mem_size[0]=765460480 BACKTRACE:0x49db91 0x47fdc9 0x48717a 0x43f121 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

[2018-12-14 05:40:47.612934] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:891) [7262][Y0-7F4480213880] [AL=47393-47392-600] history memory size, history_mem_size[1]=765460480 BACKTRACE:0x49db91 0x47fdc9 0x48717a 0x43f121 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

[2018-12-14 05:40:47.613530] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:891) [7262][Y0-7F4480213880] [AL=47394-47393-596] history memory size, history_mem_size[2]=765460480 BACKTRACE:0x49db91 0x47fdc9 0x48717a 0x43f121 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

[2018-12-14 05:40:47.614121] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:891) [7262][Y0-7F4480213880] [AL=47395-47394-591] history memory size, history_mem_size[3]=765460480 BACKTRACE:0x49db91 0x47fdc9 0x48717a 0x43f121 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

[2018-12-14 05:40:47.614717] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:891) [7262][Y0-7F4480213880] [AL=47396-47395-596] history memory size, history_mem_size[4]=765460480 BACKTRACE:0x49db91 0x47fdc9 0x48717a 0x43f121 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

[2018-12-14 05:40:47.615307] ERROR [PROXY] do_monitor_mem (ob_proxy_main.cpp:891) [7262][Y0-7F4480213880] [AL=47397-47396-590] history memory size, history_mem_size[5]=765460480 BACKTRACE:0x49db91 0x47fdc9 0x48717a 0x43f121 0xa6e623 0xe401b2 0xe3f497 0x4f674c 0x7f4487ace77d 0x7f44865ed9ad

|

||||

```

|

||||

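Keywords: obproxy's memroy is out of limit, will be going to commit suicide

As the log shows, obproxy exited because it ran out of memory.

#### Solution

An upper memory limit can be specified when obproxy starts; the default is 800M. In some situations, such as a large number of connections (the failed job wrote to 100 sharded tables with a concurrency of 32, i.e. 3200 connections), obproxy may run out of memory. To fix this, either lower the job's concurrency or raise obproxy's memory limit, for example to 2G.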

### 4.2 Session interrupted

When writing to a single table with the ob 1.0 writer, the following error occurs:

```

|

||||

2019-01-03 19:37:27.197 [0-insertTask-73] WARN InsertTask - Insert fatal error SqlState =HY000, errorCode = 5066, java.sql.SQLException: Session interrupted, server ip:port[11.145.28.93:2881]

|

||||

```

|

||||

Keywords: fatal, Session interrupted, server ip:port

The job's log also contains the following:

```

|

||||

2019-08-09 11:56:56.758 [2-insertTask-82] ERROR StdoutPluginCollector -

|

||||

java.sql.SQLException: Session interrupted, server ip:port[11.232.58.16:2881]

|

||||

at com.alipay.oceanbase.obproxy.connection.ObGroupConnection.checkAndThrowException(ObGroupConnection.java:431) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.statement.ObStatement.doExecute(ObStatement.java:598) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.statement.ObStatement.execute(ObStatement.java:456) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.statement.ObPreparedStatement.execute(ObPreparedStatement.java:148) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alibaba.datax.plugin.rdbms.writer.CommonRdbmsWriter$Task.doOneInsert(CommonRdbmsWriter.java:430) ~[plugin-rdbms-util-0.0.1-SNAPSHOT.jar:na]

|

||||

at com.alibaba.datax.plugin.writer.oceanbasev10writer.task.InsertTask.doMultiInsert(InsertTask.java:196) [oceanbasev10writer-0.0.1-SNAPSHOT.jar:na]

|

||||

at com.alibaba.datax.plugin.writer.oceanbasev10writer.task.InsertTask.run(InsertTask.java:85) [oceanbasev10writer-0.0.1-SNAPSHOT.jar:na]

|

||||

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1147) [na:1.8.0_112]

|

||||

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:622) [na:1.8.0_112]

|

||||

at java.lang.Thread.run(Thread.java:834) [na:1.8.0_112]

|

||||

Caused by: com.alipay.oceanbase.obproxy.mysql.jdbc.exceptions.jdbc4.MySQLSyntaxErrorException: INSERT command denied to user 'dwexp'@'%' for table 'mobile_product_version_info'

|

||||

at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) ~[na:1.8.0_112]

|

||||

at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:62) ~[na:1.8.0_112]

|

||||

at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) ~[na:1.8.0_112]

|

||||

at java.lang.reflect.Constructor.newInstance(Constructor.java:423) ~[na:1.8.0_112]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.Util.handleNewInstance(Util.java:409) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.Util.getInstance(Util.java:384) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.SQLError.createSQLException(SQLError.java:1052) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:4403) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:4275) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:2706) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2867) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2843) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.PreparedStatement.executeInternal(PreparedStatement.java:2085) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.mysql.jdbc.PreparedStatement.execute(PreparedStatement.java:1310) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.druid.pool.DruidPooledPreparedStatement.execute(DruidPooledPreparedStatement.java:493) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.statement.ObPreparedStatement.executeOnConnection(ObPreparedStatement.java:121) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.statement.ObStatement.doExecuteOnConnection(ObStatement.java:677) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

at com.alipay.oceanbase.obproxy.statement.ObStatement.doExecute(ObStatement.java:558) ~[oceanbase-connector-java-2.0.8.20180730.jar:na]

|

||||

... 8 common frames omitted

|

||||

```

|

||||

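As shown, the exception is caused by a missing INSERT privilege (INSERT command denied to user 'dwexp'@'%' for table).

Keywords: INSERT command denied to user 'dwexp'@'%'

Because the error comes from missing write privileges, neither the observer log nor the obproxy log contains any related information.

#### Solution

After granting the required privileges to the user in ob, retrying the job succeeds.

A reference grant command: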

```sql
grant select, insert, update on dbName.tableName to dwexp;
grant select on oceanbase.gv$memstore to dwexp;
```

|

||||

@ -115,6 +115,11 @@

|

||||

<version>4.11</version>

|

||||

<scope>test</scope>

|

||||

</dependency>

|

||||

<dependency>

|

||||

<groupId>com.oceanbase</groupId>

|

||||

<artifactId>obkv-table-client</artifactId>

|

||||

<version>1.4.0</version>

|

||||

</dependency>

|

||||

<dependency>

|

||||

<groupId>com.oceanbase</groupId>

|

||||

<artifactId>obkv-hbase-client</artifactId>

|

||||

|

||||

@ -2,62 +2,86 @@ package com.alibaba.datax.plugin.writer.oceanbasev10writer;

|

||||

|

||||

public interface Config {

|

||||

|

||||

String MEMSTORE_THRESHOLD = "memstoreThreshold";

|

||||

String MEMSTORE_THRESHOLD = "memstoreThreshold";

|

||||

|

||||

double DEFAULT_MEMSTORE_THRESHOLD = 0.9d;

|

||||

double DEFAULT_MEMSTORE_THRESHOLD = 0.9d;

|

||||

|

||||

double DEFAULT_SLOW_MEMSTORE_THRESHOLD = 0.75d;

|

||||

String MEMSTORE_CHECK_INTERVAL_SECOND = "memstoreCheckIntervalSecond";

|

||||

double DEFAULT_SLOW_MEMSTORE_THRESHOLD = 0.75d;

|

||||

|

||||

long DEFAULT_MEMSTORE_CHECK_INTERVAL_SECOND = 30;

|

||||

String MEMSTORE_CHECK_INTERVAL_SECOND = "memstoreCheckIntervalSecond";

|

||||

|

||||

int DEFAULT_BATCH_SIZE = 100;

|

||||

int MAX_BATCH_SIZE = 4096;

|

||||

long DEFAULT_MEMSTORE_CHECK_INTERVAL_SECOND = 30;

|

||||

|

||||

String FAIL_TRY_COUNT = "failTryCount";

|

||||

int DEFAULT_BATCH_SIZE = 100;

|

||||

|

||||

int DEFAULT_FAIL_TRY_COUNT = 10000;

|

||||

int MAX_BATCH_SIZE = 4096;

|

||||

|

||||

String WRITER_THREAD_COUNT = "writerThreadCount";

|

||||

String FAIL_TRY_COUNT = "failTryCount";

|

||||

|

||||

int DEFAULT_WRITER_THREAD_COUNT = 1;

|

||||

int DEFAULT_FAIL_TRY_COUNT = 10000;

|

||||

|

||||

String CONCURRENT_WRITE = "concurrentWrite";

|

||||

String WRITER_THREAD_COUNT = "writerThreadCount";

|

||||

|

||||

boolean DEFAULT_CONCURRENT_WRITE = true;

|

||||

int DEFAULT_WRITER_THREAD_COUNT = 1;

|

||||

|

||||

String OB_VERSION = "obVersion";

|

||||

String TIMEOUT = "timeout";

|

||||

String CONCURRENT_WRITE = "concurrentWrite";

|

||||

|

||||

String PRINT_COST = "printCost";

|

||||

boolean DEFAULT_PRINT_COST = false;

|

||||

boolean DEFAULT_CONCURRENT_WRITE = true;

|

||||

|

||||

String COST_BOUND = "costBound";

|

||||

long DEFAULT_COST_BOUND = 20;

|

||||

String OB_VERSION = "obVersion";

|

||||

|

||||

String MAX_ACTIVE_CONNECTION = "maxActiveConnection";

|

||||

int DEFAULT_MAX_ACTIVE_CONNECTION = 2000;

|

||||

String TIMEOUT = "timeout";

|

||||

|

||||

String WRITER_SUB_TASK_COUNT = "writerSubTaskCount";

|

||||

int DEFAULT_WRITER_SUB_TASK_COUNT = 1;

|

||||

int MAX_WRITER_SUB_TASK_COUNT = 4096;

|

||||

String PRINT_COST = "printCost";

|

||||

|

||||

boolean DEFAULT_PRINT_COST = false;

|

||||

|

||||

String COST_BOUND = "costBound";

|

||||

|

||||

long DEFAULT_COST_BOUND = 20;

|

||||

|

||||

String MAX_ACTIVE_CONNECTION = "maxActiveConnection";

|

||||

|

||||

int DEFAULT_MAX_ACTIVE_CONNECTION = 2000;

|

||||

|

||||

String WRITER_SUB_TASK_COUNT = "writerSubTaskCount";

|

||||

|

||||

int DEFAULT_WRITER_SUB_TASK_COUNT = 1;

|

||||

|

||||

int MAX_WRITER_SUB_TASK_COUNT = 4096;

|

||||

|

||||

String OB_WRITE_MODE = "obWriteMode";

|

||||

|

||||

String OB_WRITE_MODE = "obWriteMode";

|

||||

String OB_COMPATIBLE_MODE = "obCompatibilityMode";

|

||||

|

||||

String OB_COMPATIBLE_MODE_ORACLE = "ORACLE";

|

||||

|

||||

String OB_COMPATIBLE_MODE_MYSQL = "MYSQL";

|

||||

|

||||

String OCJ_GET_CONNECT_TIMEOUT = "ocjGetConnectTimeout";

|

||||

int DEFAULT_OCJ_GET_CONNECT_TIMEOUT = 5000; // 5s

|

||||

String OCJ_GET_CONNECT_TIMEOUT = "ocjGetConnectTimeout";

|

||||

|

||||

String OCJ_PROXY_CONNECT_TIMEOUT = "ocjProxyConnectTimeout";

|

||||

int DEFAULT_OCJ_PROXY_CONNECT_TIMEOUT = 5000; // 5s

|

||||

int DEFAULT_OCJ_GET_CONNECT_TIMEOUT = 5000; // 5s

|

||||

|

||||

String OCJ_CREATE_RESOURCE_TIMEOUT = "ocjCreateResourceTimeout";

|

||||

int DEFAULT_OCJ_CREATE_RESOURCE_TIMEOUT = 60000; // 60s

|

||||

String OCJ_PROXY_CONNECT_TIMEOUT = "ocjProxyConnectTimeout";

|

||||

|

||||

String OB_UPDATE_COLUMNS = "obUpdateColumns";

|

||||

int DEFAULT_OCJ_PROXY_CONNECT_TIMEOUT = 5000; // 5s

|

||||

|

||||

String USE_PART_CALCULATOR = "usePartCalculator";

|

||||

boolean DEFAULT_USE_PART_CALCULATOR = false;

|

||||

String OCJ_CREATE_RESOURCE_TIMEOUT = "ocjCreateResourceTimeout";

|

||||

|

||||

int DEFAULT_OCJ_CREATE_RESOURCE_TIMEOUT = 60000; // 60s

|

||||

|

||||

String OB_UPDATE_COLUMNS = "obUpdateColumns";

|

||||

|

||||

String USE_PART_CALCULATOR = "usePartCalculator";

|

||||

|

||||

boolean DEFAULT_USE_PART_CALCULATOR = false;

|

||||

|

||||

String BLOCKS_COUNT = "blocksCount";

|

||||

|

||||

String DIRECT_PATH = "directPath";

|

||||

|

||||

String RPC_PORT = "rpcPort";

|

||||

|

||||

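// Unlike recordLimit, this parameter applies to a single table only: one table exceeding the maximum error count does not affect other tables. Used only for direct path import.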

|

||||

String MAX_ERRORS = "maxErrors";

|

||||

}

|

||||

|

||||
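The direct path keys declared above (`directPath`, `rpcPort`, `blocksCount`, `maxErrors`) carry no default values in this interface. The following is a minimal sketch of how a caller might read them from a plain parameter map. The class name `DirectPathOptions`, every fallback value, and the use of `java.util.Map` in place of DataX's own configuration type are assumptions made for illustration, not the plugin's actual code.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical helper, not part of the plugin. The key names come from the
// constants above; every fallback value below is an assumption.
final class DirectPathOptions {
    static final String DIRECT_PATH = "directPath";
    static final String RPC_PORT = "rpcPort";
    static final String BLOCKS_COUNT = "blocksCount";
    static final String MAX_ERRORS = "maxErrors";

    final boolean directPath;
    final int rpcPort;
    final int blocksCount;
    final int maxErrors;

    DirectPathOptions(Map<String, Object> parameter) {
        // Accept both Boolean and String values for the switch.
        this.directPath = Boolean.parseBoolean(
                String.valueOf(parameter.getOrDefault(DIRECT_PATH, false)));
        this.rpcPort = intOf(parameter, RPC_PORT, 2882);       // assumed fallback
        this.blocksCount = intOf(parameter, BLOCKS_COUNT, 1);  // assumed fallback
        this.maxErrors = intOf(parameter, MAX_ERRORS, 0);      // assumed: tolerate no bad rows
    }

    // Reads an integer option, falling back when the key is absent.
    private static int intOf(Map<String, Object> parameter, String key, int fallback) {
        Object value = parameter.get(key);
        return value == null ? fallback : Integer.parseInt(value.toString().trim());
    }

    public static void main(String[] args) {
        Map<String, Object> parameter = new HashMap<>();
        parameter.put(DIRECT_PATH, true);
        parameter.put(MAX_ERRORS, "10");
        DirectPathOptions options = new DirectPathOptions(parameter);
        System.out.println(options.directPath + " " + options.rpcPort + " " + options.maxErrors);
    }
}
```

Keeping the key names in one place, as the interface above does, means a sketch like this only has to agree with the constants rather than with string literals scattered through the writer.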

@ -0,0 +1,88 @@

|

||||

package com.alibaba.datax.plugin.writer.oceanbasev10writer.common;

|

||||

|

||||

import java.util.Objects;

|

||||

|

||||

public class Table {

|

||||

private String tableName;

|

||||

private String dbName;

|

||||

private Throwable error;

|

||||

private Status status;

|

||||

|

||||

public Table(String dbName, String tableName) {

|

||||

this.dbName = dbName;

|

||||

this.tableName = tableName;

|

||||

this.status = Status.INITIAL;

|

||||

}

|

||||

|

||||

public Throwable getError() {

|

||||

return error;

|

||||

}

|

||||

|

||||

public void setError(Throwable error) {

|

||||

this.error = error;

|

||||

}

|

||||

|

||||

public Status getStatus() {

|

||||

return status;

|

||||

}

|

||||

|

||||

public void setStatus(Status status) {

|

||||

this.status = status;

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean equals(Object o) {

|

||||

if (this == o) {

|

||||

return true;

|

||||

}

|

||||

if (o == null || getClass() != o.getClass()) {

|

||||

return false;

|

||||

}

|

||||

Table table = (Table) o;

|

||||

// Null-safe comparison, consistent with Objects.hash(...) in hashCode().
return Objects.equals(tableName, table.tableName) && Objects.equals(dbName, table.dbName);

|

||||

}

|

||||

|

||||

@Override

|

||||

public int hashCode() {

|

||||

return Objects.hash(tableName, dbName);

|

||||

}

|

||||

|

||||

public enum Status {

|

||||

/**

|

||||

* Initial state, assigned by the Table constructor.

|

||||

*/

|

||||

INITIAL(0),

|

||||

|

||||

/**

|

||||

* Processing of the table is in progress.

|

||||

*/

|

||||

RUNNING(1),

|

||||

|

||||

/**

|

||||

* Processing of the table failed.

|

||||

*/

|

||||

FAILURE(2),

|

||||

|

||||

/**

|

||||

* Processing of the table finished successfully.

|

||||

*/

|

||||

SUCCESS(3);

|

||||

|

||||

private int code;

|

||||

|

||||

/**

|

||||

* @param code numeric code of this status

|

||||

*/

|

||||

private Status(int code) {

|

||||

this.code = code;

|

||||

}

|

||||

|

||||

public int getCode() {

|

||||

return code;

|

||||

}

|

||||

|

||||

public void setCode(int code) {

|

||||

this.code = code;

|

||||

}

|

||||

}

|

||||

}

|

||||
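The `Table` class above compares only `dbName` and `tableName` in `equals` and `hashCode`, so `status` and `error` are mutable bookkeeping that never changes a table's identity. A minimal sketch of that behaviour follows. `TableKey` is a hypothetical, simplified stand-in written for this example, not the plugin class itself.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

// Simplified, hypothetical stand-in for the Table class above.
// Only dbName and tableName take part in equals/hashCode; status is mutable state.
final class TableKey {
    enum Status { INITIAL, RUNNING, FAILURE, SUCCESS }

    private final String dbName;
    private final String tableName;
    private Status status = Status.INITIAL;

    TableKey(String dbName, String tableName) {
        this.dbName = dbName;
        this.tableName = tableName;
    }

    Status getStatus() { return status; }

    void setStatus(Status status) { this.status = status; }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (o == null || getClass() != o.getClass()) return false;
        TableKey other = (TableKey) o;
        return Objects.equals(tableName, other.tableName) && Objects.equals(dbName, other.dbName);
    }

    @Override
    public int hashCode() {
        return Objects.hash(tableName, dbName);
    }

    public static void main(String[] args) {
        TableKey a = new TableKey("db1", "orders");
        TableKey b = new TableKey("db1", "orders");
        b.setStatus(Status.FAILURE);

        Set<TableKey> seen = new HashSet<>();
        seen.add(a);
        System.out.println(a.equals(b));      // status is ignored by equals
        System.out.println(seen.contains(b)); // lookup succeeds despite the different status
    }
}
```

Because the hash is computed from immutable name fields only, changing the status of an object that already sits in a hash-based collection does not break later lookups.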

@ -0,0 +1,21 @@

|

||||

package com.alibaba.datax.plugin.writer.oceanbasev10writer.common;

|

||||

|

||||

import java.util.concurrent.ConcurrentHashMap;

|

||||

|

||||

public class TableCache {

|

||||

private static final TableCache INSTANCE = new TableCache();

|

||||

private final ConcurrentHashMap<String, Table> TABLE_CACHE;

|

||||

|

||||

private TableCache() {

|

||||

TABLE_CACHE = new ConcurrentHashMap<>();

|

||||

}

|

||||

|

||||

public static TableCache getInstance() {

|

||||

return INSTANCE;

|

||||

}

|

||||

|

||||

public Table getTable(String dbName, String tableName) {

|

||||

String fullTableName = String.join("-", dbName, tableName);

|

||||

return TABLE_CACHE.computeIfAbsent(fullTableName, (k) -> new Table(dbName, tableName));

|

||||

}

|

||||

}

|

||||
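`TableCache` above relies on `ConcurrentHashMap.computeIfAbsent`, which creates the value at most once per key, and builds the key with `String.join("-", dbName, tableName)`. The sketch below illustrates both points with a hypothetical stand-in class, `KeyedCache`, that stores plain `Object` markers instead of `Table` instances. It also shows a caveat of the joined key: names that themselves contain `-` can produce the same key.

```java
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for TableCache. Values are plain Object markers so the
// example stays self-contained.
final class KeyedCache {
    private final ConcurrentHashMap<String, Object> cache = new ConcurrentHashMap<>();

    // Same key construction as TableCache.getTable above.
    static String key(String dbName, String tableName) {
        return String.join("-", dbName, tableName);
    }

    Object get(String dbName, String tableName) {
        // The mapping function runs at most once per key, even under concurrent calls.
        return cache.computeIfAbsent(key(dbName, tableName), k -> new Object());
    }

    public static void main(String[] args) {
        KeyedCache cache = new KeyedCache();
        // Repeated lookups return the identical instance.
        System.out.println(cache.get("db1", "orders") == cache.get("db1", "orders"));
        // Different (db, table) pairs can collapse to one key when a name contains "-".
        System.out.println(key("a-b", "c").equals(key("a", "b-c")));
    }
}
```

If database or table names may contain `-`, one possible way to avoid the collision is a separator that cannot appear in identifiers, or a key type that holds both names separately. Whether that matters for this plugin is not something the diff settles.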

@ -0,0 +1,257 @@

|

||||

package com.alibaba.datax.plugin.writer.oceanbasev10writer.directPath;

|

||||

|

||||

import java.sql.Array;

|

||||

import java.sql.Blob;

|

||||

import java.sql.CallableStatement;

|

||||

import java.sql.Clob;

|

||||

import java.sql.DatabaseMetaData;

|

||||

import java.sql.NClob;

|

||||

import java.sql.PreparedStatement;

|

||||

import java.sql.SQLClientInfoException;

|

||||

import java.sql.SQLException;

|

||||

import java.sql.SQLWarning;

|

||||

import java.sql.SQLXML;

|

||||

import java.sql.Savepoint;

|

||||

import java.sql.Statement;

|

||||

import java.sql.Struct;

|

||||

import java.util.Map;

|

||||

import java.util.Properties;

|

||||

import java.util.concurrent.Executor;

|

||||

|

||||

public abstract class AbstractRestrictedConnection implements java.sql.Connection {

|

||||

|

||||

@Override

|

||||

public CallableStatement prepareCall(String sql) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareCall(String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public String nativeSQL(String sql) throws SQLException {

|

||||

throw new UnsupportedOperationException("nativeSQL(String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setAutoCommit(boolean autoCommit) throws SQLException {

|

||||

throw new UnsupportedOperationException("setAutoCommit(boolean) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean getAutoCommit() throws SQLException {

|

||||

throw new UnsupportedOperationException("getAutoCommit is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void abort(Executor executor) throws SQLException {

|

||||

throw new UnsupportedOperationException("abort(Executor) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setNetworkTimeout(Executor executor, int milliseconds) throws SQLException {

|

||||

throw new UnsupportedOperationException("setNetworkTimeout(Executor, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public int getNetworkTimeout() throws SQLException {

|

||||

throw new UnsupportedOperationException("getNetworkTimeout is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public DatabaseMetaData getMetaData() throws SQLException {

|

||||

throw new UnsupportedOperationException("getMetaData is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setReadOnly(boolean readOnly) throws SQLException {

|

||||

throw new UnsupportedOperationException("setReadOnly(boolean) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean isReadOnly() throws SQLException {

|

||||

throw new UnsupportedOperationException("isReadOnly is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setCatalog(String catalog) throws SQLException {

|

||||

throw new UnsupportedOperationException("setCatalog(String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public String getCatalog() throws SQLException {

|

||||

throw new UnsupportedOperationException("getCatalog is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setTransactionIsolation(int level) throws SQLException {

|

||||

throw new UnsupportedOperationException("setTransactionIsolation(int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public int getTransactionIsolation() throws SQLException {

|

||||

throw new UnsupportedOperationException("getTransactionIsolation is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public SQLWarning getWarnings() throws SQLException {

|

||||

throw new UnsupportedOperationException("getWarnings is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void clearWarnings() throws SQLException {

|

||||

throw new UnsupportedOperationException("clearWarnings is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Statement createStatement(int resultSetType, int resultSetConcurrency) throws SQLException {

|

||||

throw new UnsupportedOperationException("createStatement(int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public PreparedStatement prepareStatement(String sql, int resultSetType, int resultSetConcurrency) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareStatement(String, int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public CallableStatement prepareCall(String sql, int resultSetType, int resultSetConcurrency) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareCall(String, int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Map<String, Class<?>> getTypeMap() throws SQLException {

|

||||

throw new UnsupportedOperationException("getTypeMap is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setTypeMap(Map<String, Class<?>> map) throws SQLException {

|

||||

throw new UnsupportedOperationException("setTypeMap(Map<String, Class<?>>) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setHoldability(int holdability) throws SQLException {

|

||||

throw new UnsupportedOperationException("setHoldability(int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public int getHoldability() throws SQLException {

|

||||

throw new UnsupportedOperationException("getHoldability is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Savepoint setSavepoint() throws SQLException {

|

||||

throw new UnsupportedOperationException("setSavepoint is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Savepoint setSavepoint(String name) throws SQLException {

|

||||

throw new UnsupportedOperationException("setSavepoint(String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void rollback(Savepoint savepoint) throws SQLException {

|

||||

throw new UnsupportedOperationException("rollback(Savepoint) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void releaseSavepoint(Savepoint savepoint) throws SQLException {

|

||||

throw new UnsupportedOperationException("releaseSavepoint(Savepoint) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Statement createStatement(int resultSetType, int resultSetConcurrency, int resultSetHoldability) throws SQLException {

|

||||

throw new UnsupportedOperationException("createStatement(int, int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public PreparedStatement prepareStatement(String sql, int resultSetType, int resultSetConcurrency, int resultSetHoldability) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareStatement(String, int, int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public CallableStatement prepareCall(String sql, int resultSetType, int resultSetConcurrency, int resultSetHoldability) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareCall(String, int, int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public PreparedStatement prepareStatement(String sql, int autoGeneratedKeys) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareStatement(String, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public PreparedStatement prepareStatement(String sql, int[] columnIndexes) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareStatement(String, int[]) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public PreparedStatement prepareStatement(String sql, String[] columnNames) throws SQLException {

|

||||

throw new UnsupportedOperationException("prepareStatement(String, String[]) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Clob createClob() throws SQLException {

|

||||

throw new UnsupportedOperationException("createClob is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Blob createBlob() throws SQLException {

|

||||

throw new UnsupportedOperationException("createBlob is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public NClob createNClob() throws SQLException {

|

||||

throw new UnsupportedOperationException("createNClob is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public SQLXML createSQLXML() throws SQLException {

|

||||

throw new UnsupportedOperationException("createSQLXML is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean isValid(int timeout) throws SQLException {

|

||||

throw new UnsupportedOperationException("isValid(int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setClientInfo(String name, String value) throws SQLClientInfoException {

|

||||

throw new UnsupportedOperationException("setClientInfo(String, String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setClientInfo(Properties properties) throws SQLClientInfoException {

|

||||

throw new UnsupportedOperationException("setClientInfo(Properties) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public String getClientInfo(String name) throws SQLException {

|

||||

throw new UnsupportedOperationException("getClientInfo(String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Properties getClientInfo() throws SQLException {

|

||||

throw new UnsupportedOperationException("getClientInfo is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Array createArrayOf(String typeName, Object[] elements) throws SQLException {

|

||||

throw new UnsupportedOperationException("createArrayOf(String, Object[]) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public Struct createStruct(String typeName, Object[] attributes) throws SQLException {

|

||||

throw new UnsupportedOperationException("createStruct(String, Object[]) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setSchema(String schema) throws SQLException {

|

||||

throw new UnsupportedOperationException("setSchema(String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public <T> T unwrap(Class<T> iface) throws SQLException {

|

||||

throw new UnsupportedOperationException("unwrap(Class<T>) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public boolean isWrapperFor(Class<?> iface) throws SQLException {

|

||||

throw new UnsupportedOperationException("isWrapperFor(Class<?>) is unsupported");

|

||||

}

|

||||

}

|

||||
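`AbstractRestrictedConnection` above follows a "reject by default" pattern: the abstract base class throws `UnsupportedOperationException` for the operations it rules out, and the interface methods it does not override stay abstract, so a concrete subclass must implement exactly those. The sketch below shows the same pattern on a deliberately tiny, hypothetical interface (`MiniConnection`), because a full `java.sql.Connection` implementation would be too long for an example. All names in it are invented for illustration.

```java
// Hypothetical four-method interface standing in for java.sql.Connection.
interface MiniConnection {
    void commit();
    boolean isClosed();
    void setReadOnly(boolean readOnly);
    String getCatalog();
}

// Restricted base: rejects the operations it rules out and leaves the rest
// (commit, isClosed) abstract for subclasses to implement.
abstract class RestrictedMiniConnection implements MiniConnection {
    @Override
    public void setReadOnly(boolean readOnly) {
        throw new UnsupportedOperationException("setReadOnly(boolean) is unsupported");
    }

    @Override
    public String getCatalog() {
        throw new UnsupportedOperationException("getCatalog is unsupported");
    }
}

// Concrete subclass: the compiler forces it to supply only the abstract methods.
final class CommitOnlyConnection extends RestrictedMiniConnection {
    private boolean committed;

    @Override
    public void commit() {
        committed = true;
    }

    @Override
    public boolean isClosed() {
        return false;
    }

    boolean isCommitted() {
        return committed;
    }
}

final class RestrictedDemo {
    public static void main(String[] args) {
        CommitOnlyConnection connection = new CommitOnlyConnection();
        connection.commit();
        System.out.println(connection.isCommitted());
        try {
            connection.getCatalog();
        } catch (UnsupportedOperationException e) {
            // Callers see an unchecked exception naming the rejected method.
            System.out.println(e.getMessage());
        }
    }
}
```

One consequence of this design is that misuse surfaces at run time rather than compile time, so the exception message naming the exact method signature is the main diagnostic a caller gets.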

@ -0,0 +1,663 @@

|

||||

package com.alibaba.datax.plugin.writer.oceanbasev10writer.directPath;

|

||||

|

||||

import java.io.InputStream;

|

||||

import java.io.Reader;

|

||||

import java.math.BigDecimal;

|

||||

import java.math.BigInteger;

|

||||

import java.net.URL;

|

||||

import java.nio.charset.Charset;

|

||||

import java.sql.Array;

|

||||

import java.sql.Blob;

|

||||

import java.sql.Clob;

|

||||

import java.sql.Date;

|

||||

import java.sql.NClob;

|

||||

import java.sql.ParameterMetaData;

|

||||

import java.sql.Ref;

|

||||

import java.sql.ResultSet;

|

||||

import java.sql.ResultSetMetaData;

|

||||

import java.sql.RowId;

|

||||

import java.sql.SQLException;

|

||||

import java.sql.SQLWarning;

|

||||

import java.sql.SQLXML;

|

||||

import java.sql.Time;

|

||||

import java.sql.Timestamp;

|

||||

import java.time.Instant;

|

||||

import java.time.LocalDate;

|

||||

import java.time.LocalDateTime;

|

||||

import java.time.LocalTime;

|

||||

import java.time.OffsetDateTime;

|

||||

import java.time.OffsetTime;

|

||||

import java.time.ZonedDateTime;

|

||||

import java.util.Calendar;

|

||||

import java.util.List;

|

||||

|

||||

import com.alipay.oceanbase.rpc.protocol.payload.impl.ObObj;

|

||||

import com.alipay.oceanbase.rpc.protocol.payload.impl.ObObjType;

|

||||

import com.alipay.oceanbase.rpc.util.ObVString;

|

||||

import org.apache.commons.io.IOUtils;

|

||||

|

||||

public abstract class AbstractRestrictedPreparedStatement implements java.sql.PreparedStatement {

|

||||

|

||||

private boolean closed;

|

||||

|

||||

@Override

|

||||

public void setNull(int parameterIndex, int sqlType) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(null));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setNull(int parameterIndex, int sqlType, String typeName) throws SQLException {

|

||||

throw new UnsupportedOperationException("setNull(int, int, String) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBoolean(int parameterIndex, boolean x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setByte(int parameterIndex, byte x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setShort(int parameterIndex, short x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setInt(int parameterIndex, int x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setLong(int parameterIndex, long x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setFloat(int parameterIndex, float x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setDouble(int parameterIndex, double x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBigDecimal(int parameterIndex, BigDecimal x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setString(int parameterIndex, String x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBytes(int parameterIndex, byte[] x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setDate(int parameterIndex, Date x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setDate(int parameterIndex, Date x, Calendar cal) throws SQLException {

|

||||

throw new UnsupportedOperationException("setDate(int, Date, Calendar) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setTime(int parameterIndex, Time x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setTime(int parameterIndex, Time x, Calendar cal) throws SQLException {

|

||||

throw new UnsupportedOperationException("setTime(int, Time, Calendar) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setTimestamp(int parameterIndex, Timestamp x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setTimestamp(int parameterIndex, Timestamp x, Calendar cal) throws SQLException {

|

||||

throw new UnsupportedOperationException("setTimestamp(int, Timestamp, Calendar) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setObject(int parameterIndex, Object x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setObject(int parameterIndex, Object x, int targetSqlType) throws SQLException {

|

||||

throw new UnsupportedOperationException("setObject(int, Object, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setObject(int parameterIndex, Object x, int targetSqlType, int scaleOrLength) throws SQLException {

|

||||

throw new UnsupportedOperationException("setObject(int, Object, int, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setRef(int parameterIndex, Ref x) throws SQLException {

|

||||

throw new UnsupportedOperationException("setRef(int, Ref) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setArray(int parameterIndex, Array x) throws SQLException {

|

||||

throw new UnsupportedOperationException("setArray(int, Array) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setSQLXML(int parameterIndex, SQLXML xmlObject) throws SQLException {

|

||||

throw new UnsupportedOperationException("setSQLXML(int, SQLXML) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setURL(int parameterIndex, URL x) throws SQLException {

|

||||

// if (x == null) {

|

||||

// this.setParameter(parameterIndex, createObObj(x));

|

||||

// } else {

|

||||

// // TODO: decide whether backslash escapes and character encoding need handling here.

|

||||

// this.setParameter(parameterIndex, createObObj(x.toString()));

|

||||

// }

|

||||

throw new UnsupportedOperationException("setURL(int, URL) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setRowId(int parameterIndex, RowId x) throws SQLException {

|

||||

throw new UnsupportedOperationException("setRowId(int, RowId) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setNString(int parameterIndex, String value) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(value));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBlob(int parameterIndex, Blob x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBlob(int parameterIndex, InputStream x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBlob(int parameterIndex, InputStream x, long length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setBlob(int, InputStream, long) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setClob(int parameterIndex, Clob x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setClob(int parameterIndex, Reader x) throws SQLException {

|

||||

this.setCharacterStream(parameterIndex, x);

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setClob(int parameterIndex, Reader x, long length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setClob(int, Reader, long) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setNClob(int parameterIndex, NClob x) throws SQLException {

|

||||

this.setClob(parameterIndex, (Clob) (x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setNClob(int parameterIndex, Reader x) throws SQLException {

|

||||

this.setClob(parameterIndex, x);

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setNClob(int parameterIndex, Reader x, long length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setNClob(int, Reader, long) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setAsciiStream(int parameterIndex, InputStream x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Deprecated

|

||||

@Override

|

||||

public void setUnicodeStream(int parameterIndex, InputStream x, int length) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setAsciiStream(int parameterIndex, InputStream x, int length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setAsciiStream(int, InputStream, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setAsciiStream(int parameterIndex, InputStream x, long length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setAsciiStream(int, InputStream, long) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBinaryStream(int parameterIndex, InputStream x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBinaryStream(int parameterIndex, InputStream x, int length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setBinaryStream(int, InputStream, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setBinaryStream(int parameterIndex, InputStream x, long length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setBinaryStream(int, InputStream, long) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setCharacterStream(int parameterIndex, Reader x) throws SQLException {

|

||||

this.setParameter(parameterIndex, createObObj(x));

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setCharacterStream(int parameterIndex, Reader x, int length) throws SQLException {

|

||||

throw new UnsupportedOperationException("setCharacterStream(int, Reader, int) is unsupported");

|

||||

}

|

||||

|

||||

@Override

|

||||

public void setCharacterStream(int parameterIndex, Reader x, long length) throws SQLException {

|

||||